Overview

GUM's big brother

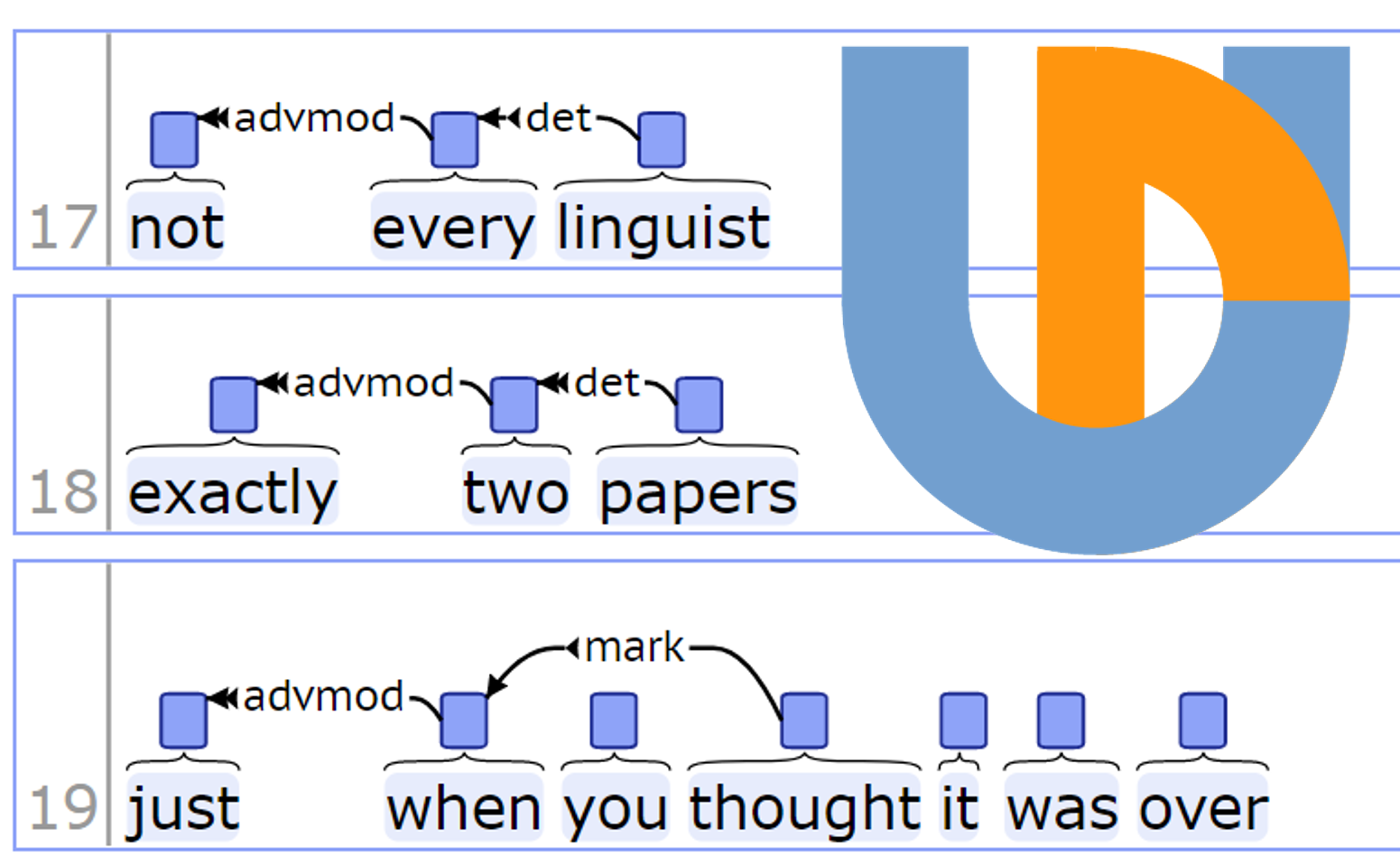

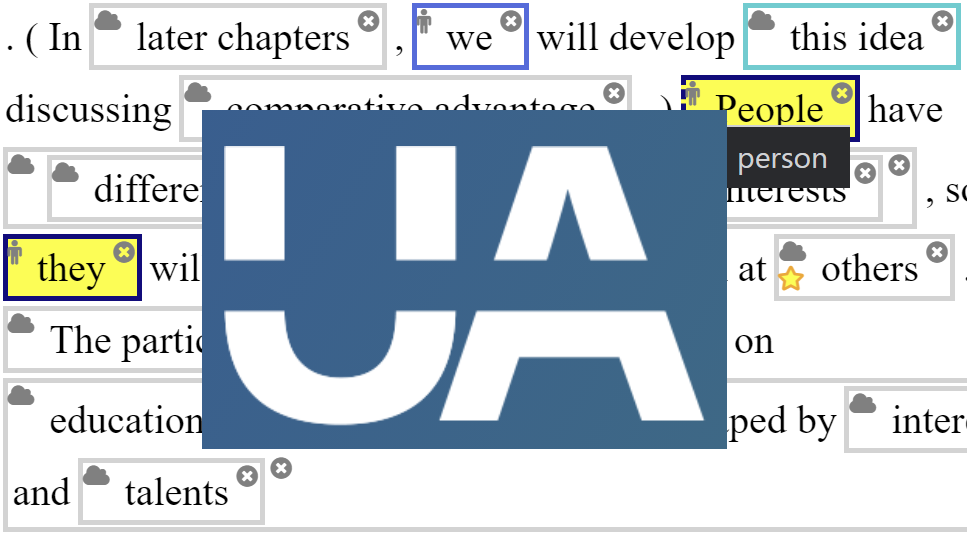

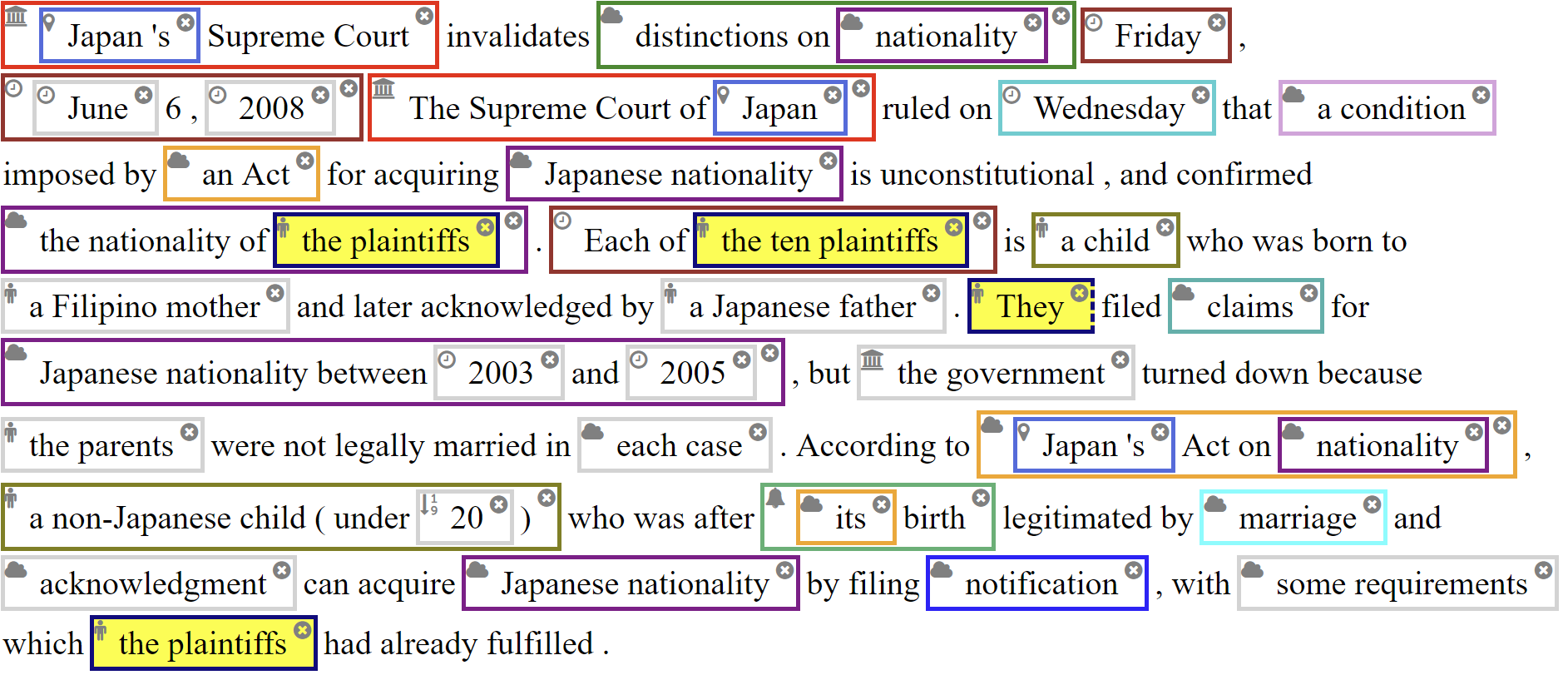

AMALGUM is a machine-annotated multilayer corpus that follows the same design and annotation layers as GUM but is substantially larger (around 4M tokens). The goal of this corpus is to close the gap between high-quality, richly annotated but small datasets and the larger but shallowly annotated corpora that are often scraped from the Web. In particular, we aim to make data available to support:

- Pretraining on large-scale, silver-quality data before fine-tuning on smaller gold-standard datasets

- Active learning to supplement training data and iteratively improve AMALGUM's own data

- NLP output of better-than-out-of-the-box quality, using every possible trick, to serve as both a tool and a target for NLP research

Composition

AMALGUM follows the same corpus design as GUM and currently contains the text types from the GUM version 6 series, some of them drawn from different sources to allow for the larger scale:

| Text type | Source | Docs | Tokens |
|---|---|---|---|
| Interviews | Wikinews | 778 | 500,090 |
| News stories | Wikinews | 778 | 500,090 |
| Travel guides | Wikivoyage | 482 | 500,680 |
| How-to guides | wikiHow | 613 | 500,014 |
| Academic writing | MDPI | 662 | 500,285 |
| Biographies | Wikipedia | 600 | 500,760 |
| Fiction | Project Gutenberg | 457 | 500,088 |
| Forum discussions |  | 682 | 500,412 |
| Total |  | 4,960 | 4,002,929 |
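
Because every text type is capped at roughly the same token count, the document counts above imply quite different average document lengths. The short Python sketch below works this out from the per-type figures, copied as printed in the table. The names `COMPOSITION` and `mean_tokens_per_doc` are illustrative only and are not part of any AMALGUM tooling.

```python
# Per-type figures copied as printed in the composition table:
# text type -> (number of documents, number of tokens).
COMPOSITION = {
    "Interviews": (778, 500_090),
    "News stories": (778, 500_090),
    "Travel guides": (482, 500_680),
    "How-to guides": (613, 500_014),
    "Academic writing": (662, 500_285),
    "Biographies": (600, 500_760),
    "Fiction": (457, 500_088),
    "Forum discussions": (682, 500_412),
}


def mean_tokens_per_doc(composition):
    """Return the average document length (in tokens) for each text type."""
    return {
        text_type: tokens / docs
        for text_type, (docs, tokens) in composition.items()
    }


if __name__ == "__main__":
    means = mean_tokens_per_doc(COMPOSITION)
    # List text types from shortest to longest average document.
    for text_type, mean_len in sorted(means.items(), key=lambda kv: kv[1]):
        print(f"{text_type:<18} {mean_len:8.1f}")
```

On these figures, fiction documents are the longest on average (roughly 1,090 tokens each), while the Wikinews documents are the shortest (roughly 640 tokens each).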