Congratulations on Corpling lab's system DeDisCo winning the DISRPT 2025 Shared Task on Discourse Relation Classification!

GUM is an open source multilayer corpus of richly annotated web texts from eight text types. The corpus is collected and expanded by students as part of the curriculum in LING-4427 Computational Corpus Linguistics at Georgetown University. The selection of text types is meant to represent different communicative purposes, while coming from sources that are readily and openly available (mostly Creative Commons licenses), so that new texts can be annotated and published with ease.

We add new data every year, and every three years we change the genres to expand in the next edition of GUM. This is how the data currently breaks down:

| Text type | Source | Docs | Tokens | |

|---|---|---|---|---|

| Academic writing | Various |  | 18 | 17,169 |

| Biographies | Wikipedia |  | 20 | 18,213 |

| CC Vlogs | YouTube |  | 15 | 16,864 |

| Conversations | UCSB Corpus |  | 15 | 17,932 |

| Courtroom transcripts | Various |  | 9 | 11,148 |

| Essays | Various |  | 9 | 10,842 |

| Fiction | Various |  | 19 | 17,511 |

| Forum |  | 18 | 16,364 | |

| How-to guides | wikiHow |  | 19 | 17,081 |

| Interviews | Wikinews |  | 19 | 18,196 |

| Letters | Various |  | 12 | 9,989 |

| News stories | Wikinews |  | 24 | 17,186 |

| Podcasts | Various |  | 10 | 11,986 |

| Political speeches | Various |  | 15 | 16,720 |

| Textbooks | OpenStax |  | 15 | 16,693 |

| Travel guides | Wikivoyage |  | 18 | 16,515 |

| Total GUM | 255 | 250,409 | ||

| OOD GENTLE | 26 | 17,799 | ||

| All partitions | 281 | 268,208 |

In addition to new documents in the same genres introduced in V10, the release of version 11 introduced an expansion to the summarization and salience annotations in GUM: documents now include 5 aligned summaries each. For the development and test sets, all summaries were written by humans using the summarization guidelines, and for the training set, LLM-generated summaries were manually corrected by humans to conform to the guidelines.

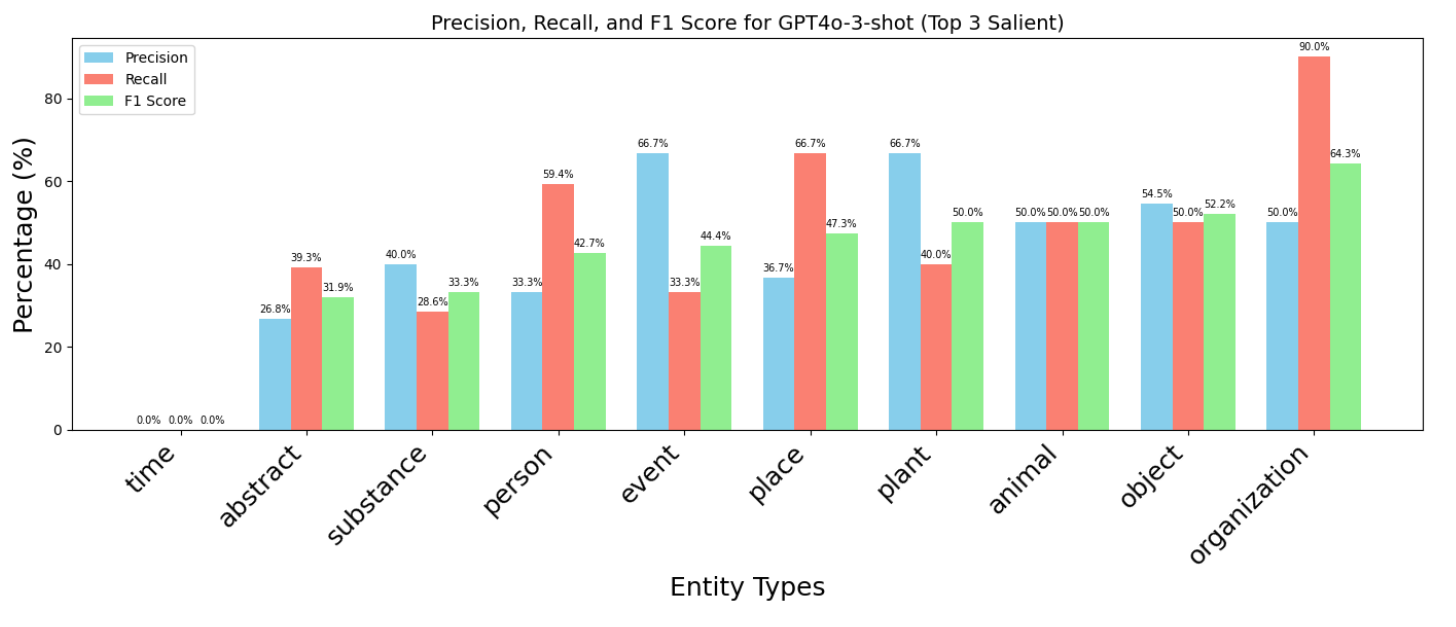

With the new summaries available, the salience annotation scheme in the corpus changed from binary labels (based on whether an entity was mentioned in the summary), to a graded salience labels based on scalar summary-worthiness: documents entities are each aligned to the summaries that mention them, producing a salience score between 0 (not mentioned in any summary) and 5 (mentioned in all summaries). You can read more about these annotations, including evaluations of LLM accuracy and a hybrid system to predict entity salience, in this paper.

GPT4o 3-shot performance on finding the top 3 most salient entities per document by entity type.

After three cycles working on conversations, vlogs, political speeches and textbooks, GUM V10 introduced four new genres, bringing the total up to 12. The newly added genres are again split between spoken and written text types and cover: courtroom transcripts, argumentative essays, letters and podcasts. This version also saw the introduction of the alternative PDTB v3 style shallow discourse relation annotation layer, released under the name GDTB: The Georgetown Discourse Treebank.

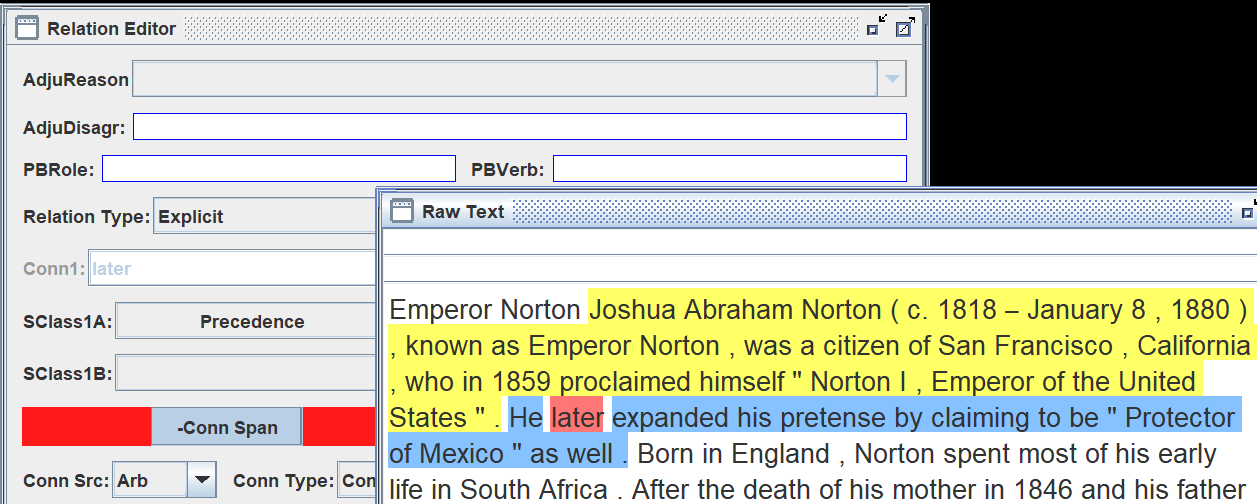

While Enhanced Rhetorical Structure Theory (eRST) interprets documents as a hierarchical graph structure, linking spans of text called Elementary Discourse Units (EDUs) through nested rhetorical relations, the Penn Discourse Treebank approach analyzes relations in isolation (without hierarchy) and focuses on pairwise links between spans that are either linked explicitly by a connective (e.g. "but" or "on the other hand"), or adjacent spans for which such a connective could be inserted. With the release of GDTB data following version 3 of the PDTB annotation guidelines, GUM can be used to directly compare how RST and PDTB based analyses converge and differ in describing discourse relations in text.

Shallow discourse relation annotations in the GDTB layer of GUM.

Version 9 of GUM has more data from the same genres as Version 8, which were introduced in Version 7, but focuses on new annotations and an expansion of the RST framework called eRST (Enhanced Rhetorical Structure Theory). New annotation types in this version include: abstractive summaries for each document (later expanded to 5 summaries, see above), binary salience annotations (later expanded to graded salience, see above), Construction Grammar annotations, and morphological annotations such as morphological segmentation (MSeg) and foreign language annotations.

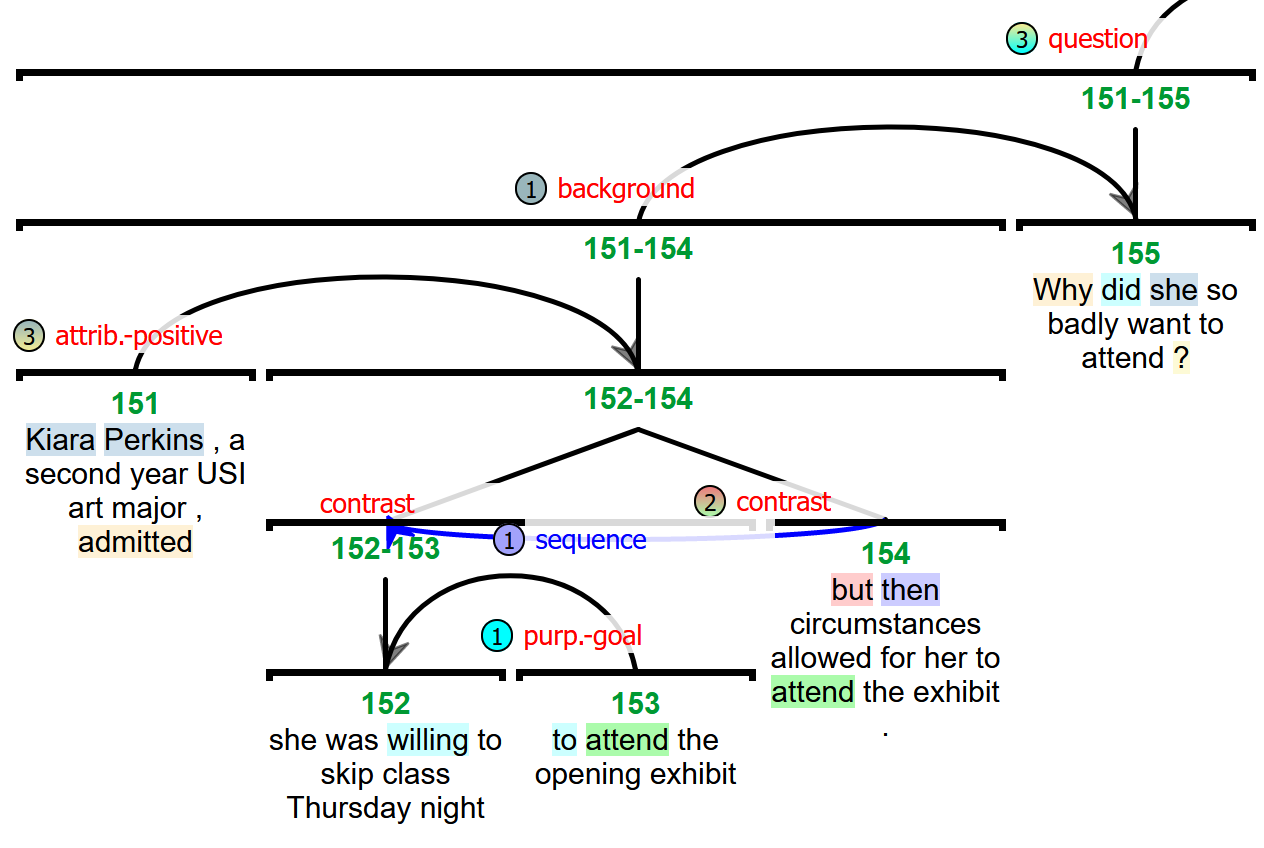

With the addition of eRST, discourse graphs in the corpus have been enhanced to include multiple concurrent and non-projective discourse relations superimposed on the RST tree, as shown in the figure below. The eRST graphs also include typed and sub-categorized signal annotations indicating how each discourse relation is signaled, including discourse markers (connectives like "but" for contrastive relations or "then" for temporal sequence), lexical signals, coherence based on coreference, graphical signals, syntactic devices and more. For example, the question in the figure is signaled by a the wh-word "why", by the subject-auxiliary inversion ("why did she") and by the graphical questions mark. These annotations allow us to study relation signaling strategies and ambiguity across genres. To learn more about eRST, check out our CL journal paper!

An eRST graph fragment. Numbers next to edges indicate how many signals have been identified. Signal categories and their positions are indicated by colored highlights. Tree-breaking secondary edges are drawn in blue.

Beginning with Version 9, each document in GUM received one abstractive summary following strict guidelines - summaries are restricted to a single sentence, max 380 characters, and should preserve word choices from the document while only mentioning things mentioned in the document itself. These summaries allowed us to study how summarization works across genres, and formed the basis of our initial round of binary salience labels, using the idea that if an entity is salient to a document, then it will be difficult to summarize it without mentioning it.

In Version 9.2, we added Construction Grammar annotations using the new UCxn scheme, marking up constructions such as resultatives ("hammer the metal flat"), causal excess ("so big that it fell over") and more - see the complete current list here.

This version also saw the introduction of morphological segmentation information for tokens base on Unimorph, illustrated below:

In Version 8 of GUM, we introduced a reworked hierarchical system of RST discourse relations (32 labels), added entity annotations according to Centering Theory, updated the Universal Dependencies scheme for syntax annotations, added WikiData identifiers to the Wikification annotations, and expanded the data with more documents in the same genres introduced in Version 7.

Beginning with Version 8, the RST discourse relation inventory in GUM is hierarchical, including 15 major types and 32 total subtypes:

|

|

Centering Theory is a model of local discourse coherence that explains how speakers maintain and shift attention between entities across sentences. Each sentence is associated with a set of forward-looking centers (Cf), which are the entities it introduces or mentions, ranked by prominence (typically grammatical roles like subject > object). One of these entities is designated as the preferred center (Cp), and the unique backward-looking center (Cb) connects the sentence to the previous one by identifying the entity currently in focus. The theory classifies transitions between sentences—such as Continue (same Cb and Cp), Retain (same Cb but different Cp), and Shift (different Cb)—based on the relationship between the Cb of the current sentence and that of the previous sentence, as well as how the current Cp aligns with them. These transitions help predict discourse coherence and pronoun resolution preferences.

Starting in Version 8 of GUM, all entities are ranked for Cf status in each sentence, and the unique Cb is selected if available. Entities are given annotations such as Cf3 (rank 3 forward-looking center), or Cf1*, where the * indicates that this is also the backwards-looking center. Transitions between sentences are computed based on these using the seven transition types summarized in Poesio et al. (2004).

Centering Theory annotations in GUM8.

We can see how transitions vary between genres quite strongly - the following association plot of Chi Squared residuals indicates for example that biographies follow Centering Theory coherence most closely by strongly preferring "continue" transitions, while conversations contain the most disjointed "null" transitions.

Centering Theory transitions vs. genres in GUM8.

The release of GUM series 7 added four more genres to our multilayer corpus, in addition to brand new annotation layers, corrections, and more.

Since version 7 we've started to focus more on spoken materials, and the new genres include face to face converations, political speeches, open access textbooks and YouTube vlogs.

This version added a new annotation type, which we've retrofitted our old annotations to include as well: Named Entity Linking, or more specifically Wikification. This adds links to Wikipedia for all named entities that have a corresponding Wikipedia article. Unlike other Wikified datasets, GUM includes not only 'atomic' named spans, where a person's name might be linked to Wikipedia, but thanks to our nested coreference annotations, also all mentions of a wikified entity, including pronominal and common noun cases, as well as mentions within mentions. This means the "she" might be linked to French actress Jeanne Moreau in context, and that mentions like the [United States [Congress]] will include links to both entities' Wikipedia pages. Many thanks to Jessica Yi-Ju Lin for working on this project!

Nested Wikification of mentions of Jeanne Moreau, an award she won, and the festival and location where she won it.

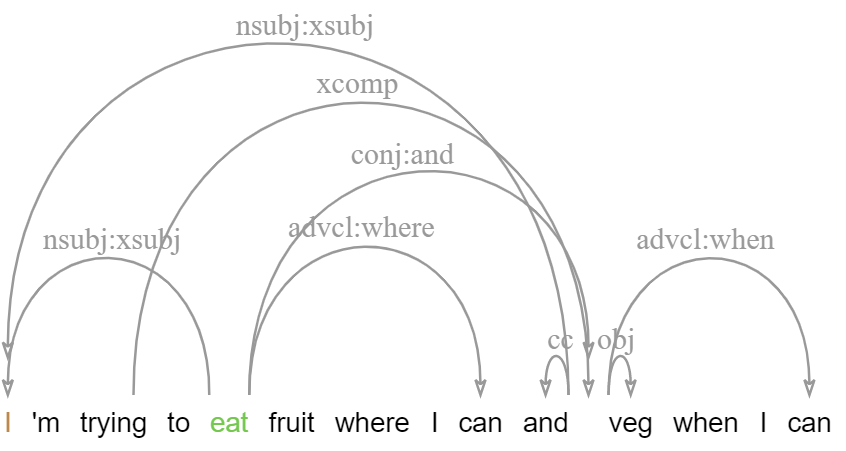

The new enhanced dependencies layer adds an additional graph representation with structure sharing, which more closely reflects semantic argument structure (see the guidelines). This layer is produced semi-automatically by propagating structure sharing across coordination, subject and object control and more, and is then adjusted including the introduction of 'virtual' tokens to cover ellipsis, gapping, right-node-raising and related phenomena. This layer also provides augmented label types including lexical subtypes, such as obl:on to indicate an oblique PP modifier headed by 'on', or conj:or to indicate a disjunction marked by 'or'.

Following work by Nitin Venkateswaran, the new release has much more extensive date/time annotations, including normalization for seasons and parts of the day (e.g. annotating any 'summer' as not before beginning of June, and not after end of August, etc.). The new data follows practices in other time annotated corpora more closely, and will be used to evaluate day/time prediction accuracy for automated datasets, such as our AMALGUM corpus.

Constituent trees in GUM are automatically parsed, except for a small subset of manually annotated test documents, using high accuracy parsing from gold POS tags. Recent advances in parsing have meant that we can now get even more accurate parses, which are now produced by the state of the art neural parser from Mrini et al. (adapted to the GUM build bot by Nitin Venkateswaran).

Another addition in this version is the incorporation of consituent function labels following the original Penn Treebank phrase function labels, such as NP-SBJ for subject NPs, subtypes of adverbials and PPs (ADVP-TMP, ADVP-MNR, PP-LOC, PP-DIR etc.). The GUM build bot now projects function labels onto the constituent trees, which are searchable in ANNIS as shown below.

Constituent trees enriched with functional labels, such as SBJ, NOM, DIR.

Since GUM6, we have been working on better convergence with existing standards, including revising RST segmentation to match the RST Discourse Treebank exactly, as well as convergence in POS tagging and dependency parsing with OntoNotes and the English Web Treebank.

As part of our efforts to match UD annotation conventions, we have completely reimplemented our morphological tagging pipeline, which now outputs all features expected by Universal Dependencies, including propagation of person, number and case information, including on unmarked verb forms (VBD, VBP), which now indicate the subject person and number.

Morphological annotations following the UD standard.

Since GUM7 the corpus better separates split antecedent anaphora, illustrated below, with a special information status value split, as well as a dedicated edge type.

Goodehas been in a relationship withSophie Dymokesince 2005.Theymarried in 2014.(They split-refers to → Goode, Sophie Symoke)